At SMX Advanced, Matt Cutts told Danny Sullivan ON CAMERA that links would be an important search ranking factor for some years to come. He chose the impassioned answer and tried to explain some of the issues surrounding the enormity of the Internet, but stopped short of giving the whole, geeky answer.

We thought we would expand on his comments. When he talks about “the enormity of it all” he leaves you hanging a bit. We think we have some concept of what he means.

The truth is that in order to know WHICH page is the most relevant to return for any given user query, you not only need to be able to crawl and index all the content, you also need to know how to order the data set at your disposal. It turns out that is HARD, because most signals are disparate and not easy to compare side by side. Certainly, some signals can influence rankings significantly in certain situations. For example, if you are positively identified as being located in Charlotte, NC, USA, the chances are that when you type in “Chinese Takeaway” you are not looking for our favourite lunch haunt in Birmingham. However, it is not a universal signal and not always appropriate.

As Matt Cutts says in the interview, paraphrasing the immensely influential Improbability Drive theorist Douglas Adams: “Space is big, you have no idea how vastly, hugely, mind-bogglingly big space is”. He then carries on, still loosely paraphrasing Adams: “You may think a walk down to the chemist is long, but that’s peanuts to space, and the fact is the web is like that”.

He is right. He gives an idea of that scale when he says that the Library of Congress has 151.4 million items which, when scanned through OCR, amount to around 235 terabytes of text. For the biggest library in the world, 235 terabytes is all it needs. Here at Majestic, in order to crawl the whole web and JUST record the link data, we have to measure our combined storage in petabytes, even before we include any storage used by the crawlers themselves around the world.

Matt uses an example to demonstrate a point: when you start to use other signals, you really are not going to see a holistic metric for a while to come. When the suggestion is that social is going to take over, or that links are dead, Matt points out that it is premature to reach that conclusion and suggests that links will remain a significant factor for some time yet. He points out that the share of links on the web that are nofollowed is only a single-digit percentage. You can see that easily enough for yourself on Majestic SEO by looking at any website’s headline link stats. MattCutts.com, for example, has a higher nofollow share than most, at around 12%. CNN.com is lower, at around 1%.
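Majestic’s headline stats count the links pointing into a site from across the whole crawl. As a much smaller illustration of what “percentage nofollowed” means, here is a minimal sketch that counts the `<a href>` links in a single page of HTML and reports the share marked `rel="nofollow"`. It uses only Python’s standard-library `html.parser`; the class and function names are hypothetical, and this is not how Majestic computes its figures.

```python
from html.parser import HTMLParser


class LinkRelCounter(HTMLParser):
    """Counts <a href> links in an HTML document and how many carry rel="nofollow"."""

    def __init__(self) -> None:
        super().__init__()
        self.total = 0
        self.nofollow = 0

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = dict(attrs)  # attrs is a list of (name, value) pairs
        if attr_map.get("href") is None:
            return  # an anchor without an href is not a link
        self.total += 1
        # rel may hold several space-separated tokens, e.g. "nofollow noopener"
        rel_tokens = (attr_map.get("rel") or "").lower().split()
        if "nofollow" in rel_tokens:
            self.nofollow += 1


def nofollow_percentage(html: str) -> float:
    """Percentage of links in `html` marked nofollow (0.0 if there are no links)."""
    counter = LinkRelCounter()
    counter.feed(html)
    counter.close()
    if counter.total == 0:
        return 0.0
    return 100.0 * counter.nofollow / counter.total


sample = (
    '<a href="/a">A</a><a href="/b" rel="nofollow">B</a>'
    '<a href="/c" rel="Nofollow noopener">C</a><a href="/d">D</a>'
)
print(nofollow_percentage(sample))  # 50.0
```

Matching on the split `rel` tokens, rather than the raw attribute string, avoids missing links whose `rel` combines several values.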

There is another challenge, though: the ability to store, assimilate and, importantly, retrieve large amounts of disparate data points at scale. Let’s say Google does indeed manage to assimilate us all and we have a Google profile for every person on the planet. That’s, say, 50 MB of data per person, which is pretty conservative if we include an avatar.

So that’s a pretty straightforward calculation: 50,000,000 bytes × 6,973,738,433 = 348,686,921,650,000,000 bytes. That’s roughly 348,687 terabytes. Now, that is doable, but the interrelationships between these 7 billion people are a wholly different scenario. When we looked at including internal links in our Flow Metrics, our link count increased by 800%. The relationship between, say, Dixon Jones and Matt Cutts is complex. Are they personal friends? Well, no. But have they played cards together? Well, yes. Do they personally have friends in common? Hell yes! Would Matt Cutts trust a post from Dixon Jones? well… hmm… about as much as Dixon would trust one from Matt, less a bit, I expect.
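The arithmetic above can be checked with a few lines of Python. The 50 MB per person and the population figure are the post’s own, and the 235 TB Library of Congress figure comes from Matt’s example. The pairwise count n(n-1)/2 is our own illustration of why relationships, rather than profiles, are the hard part: it is an upper bound on possible person-to-person connections, not a count of real ones.

```python
# Figures taken from the post; decimal units (1 MB = 10**6 bytes).
BYTES_PER_PROFILE = 50_000_000      # 50 MB per person
POPULATION = 6_973_738_433
TERABYTE = 10**12
PETABYTE = 10**15
LIBRARY_OF_CONGRESS_TB = 235        # OCR text estimate quoted in the interview


def profile_storage_bytes(people: int, bytes_each: int) -> int:
    """Total bytes needed to store one fixed-size profile per person."""
    return people * bytes_each


def possible_pairs(people: int) -> int:
    """Distinct unordered person-to-person relationships: n(n-1)/2."""
    return people * (people - 1) // 2


total = profile_storage_bytes(POPULATION, BYTES_PER_PROFILE)
print(f"profiles: {total:,} bytes")
print(f"  = {total / TERABYTE:,.0f} TB = {total / PETABYTE:,.1f} PB")
print(f"  = {total / TERABYTE / LIBRARY_OF_CONGRESS_TB:,.0f} Libraries of Congress")
print(f"possible pairwise relationships: {possible_pairs(POPULATION):,}")
```

Storage grows linearly with the number of people (about 348.7 PB here), but the number of possible relationships grows with the square, landing around 2.4 × 10^19. That gap is the scale problem the paragraph is pointing at.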

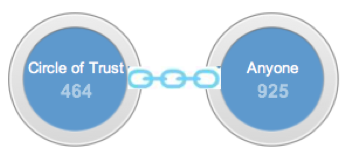

So then (and here’s my point), when Dixon Jones searches the internet for “Douglas Adams Quotes”, there are a lot of them out there. Clearly Matt Cutts has some to hand (I doubt, in context, it was accurate, but Matt, if it was, then the odds were EXACTLY a million to one that it was, as Terry Pratchett could tell you). But there are a lot of Douglas Adams quotes to be had: 30 million pages of them, according to the all-wise one. How are you going to order 30 million results about Douglas Adams quotes based on the views and opinions expressed by a few thousand friends, or friends of friends, in your Circle of Trust? How many of them have an opinion on the subject at all? And even if they did, I have as many friends who think Douglas Adams should be burnt at the stake as a heretic as have actually worked out the ultimate question. (The ultimate question needs you to read the books, but the answer is, as you may know, 42.)
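A quick back-of-the-envelope sketch shows how thin a friends-based signal is against 30 million candidates. The 30 million figure is from the search above; the 3,000 friends and the five opinions per friend are hypothetical numbers chosen for illustration, and the calculation deliberately takes the most generous case (every friend has an opinion, and no two friends endorse the same page).

```python
# Hypothetical inputs: the post gives ~30 million candidates and "a few thousand"
# friends; the opinions-per-friend figure is an assumption for illustration.
CANDIDATES = 30_000_000
FRIENDS = 3_000
OPINIONS_PER_FRIEND = 5


def endorsed_candidates(candidates: int, friends: int, opinions_each: int) -> int:
    """Upper bound on how many candidates receive any friend signal at all.

    Generous on purpose: assumes every friend has an opinion and that no two
    friends endorse the same page.
    """
    return min(candidates, friends * opinions_each)


def coverage(candidates: int, friends: int, opinions_each: int) -> float:
    """Fraction of candidates that carry any friend signal (upper bound)."""
    return endorsed_candidates(candidates, friends, opinions_each) / candidates


endorsed = endorsed_candidates(CANDIDATES, FRIENDS, OPINIONS_PER_FRIEND)
print(f"at most {coverage(CANDIDATES, FRIENDS, OPINIONS_PER_FRIEND):.2%} of results carry a friend signal")
print(f"{CANDIDATES - endorsed:,} results tie at a social score of zero")
```

With these assumed numbers, at most 15,000 pages (0.05% of the candidates) get any social signal, leaving 29,985,000 results with nothing to tell them apart.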

By contrast, links win as a ranking signal by virtue of their ubiquity and the relative ease of classifying and ranking them. A link only pertains to a relationship between two entities on the web, and this gives the link context. The number of links pointing into a URL and the relative strength of those links give the content itself strength in that context. In short, it’s easier to retrieve this data FAST out of a set of 30 million candidates than it would be to order the views of a few thousand friends who may or may not have an opinion on the subject.
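To make the “links are fast to rank” argument concrete, here is a minimal sketch that runs the textbook PageRank power iteration over a toy link graph and then uses `heapq.nlargest` to pull the top results from a candidate list. This is the classic published formulation with a damping factor of 0.85, not Majestic’s Flow Metrics and not whatever Google runs today; the `example` URLs and the function names are hypothetical. The idea it illustrates is that link scores can be computed offline, once, so answering a query needs only a lookup plus a top-k selection.

```python
import heapq


def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 50) -> dict[str, float]:
    """Textbook PageRank power iteration over a small link graph.

    `links` maps each URL to the URLs it links out to. Pages with no outlinks
    spread their score evenly over all pages (the usual dangling-node fix).
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        dangling = sum(rank[p] for p in pages if not links.get(p))
        new_rank = {p: (1.0 - damping) / n + damping * dangling / n for p in pages}
        for source, targets in links.items():
            if targets:
                share = damping * rank[source] / len(targets)
                for target in targets:
                    new_rank[target] += share
        rank = new_rank
    return rank


def top_k(scores: dict[str, float], candidates: list[str], k: int) -> list[str]:
    """Return the k highest-scoring candidates without sorting the whole list."""
    return heapq.nlargest(k, candidates, key=lambda url: scores.get(url, 0.0))


graph = {
    "a.example": ["c.example"],
    "b.example": ["c.example"],
    "c.example": ["a.example"],
    "d.example": [],
}
scores = pagerank(graph)
print(top_k(scores, ["a.example", "b.example", "c.example", "d.example"], 2))
# ['c.example', 'a.example']
```

Because the scores are precomputed, selecting the top k of N candidates with `heapq.nlargest` costs roughly O(N log k), which is the sense in which link data can be retrieved quickly even from 30 million results.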

The alternative to backlink-based ranking would be some sort of artificial intelligence that is able to analyse the quality of individual pages and rank them accordingly. Or some text analysis using quotations and patterns in text to see how often a topic or another website is mentioned, and on which domains across the web. This might be more precise than link-based PageRank. Algorithms to do this are already available, I think. But analysing trillions of webpages this way would require far too much computing power, even for Google. In 5-10 years, though, CPU power might become cheap enough to do such things. The next level would then be psychohistory, to not only predict the rank of a site but also forecast the future: http://en.wikipedia.org/wiki/Psychohistory_(fictional)

July 25, 2012 at 3:32 pm
The problem is that all on-page content can be copied, and there can be millions of pages with exactly the same content. How would you rank those without some external factors?

July 25, 2012 at 4:56 pm
> Links were the first major “Off The Page” ranking factor used by search engines. No, Google wasn’t the first search engine to count links as “votes,” but it was the first search engine to massively depend on link analysis as a way to improve relevancy.
>
> Today, links remain the most important external signal that can help a web site rise in the rankings. But some links are more equal than others….

August 10, 2012 at 11:44 pm
Nice post, Dixon. I agree that links will remain an important ranking factor UNTIL someone comes up with a better ranking factor. It will be quite interesting to see another factor running parallel to links in deciding the rankings of a website.

July 26, 2012 at 10:32 am
I would have to agree that links are not going anywhere anytime soon. The way they are ranked has changed, but the need for them has not. Now it seems to be more about not overdoing the links and keeping the anchor texts in the right percentages. MajesticSEO is the best tool I have found that helps me do that.

July 30, 2012 at 9:04 pm
“Would Matt Cutts trust a post from Dixon Jones? well… hmm… about as much as Dixon would trust one from Matt, less a bit, I expect.” lol 🙂

Happy to hear that links will continue to count. However, I think that social factors are slowly taking over; they are the best proof that your site offers quality content.

August 2, 2012 at 3:51 pm
I just found this article and I am glad I did! I am an account manager for Advance Digital, which works with its news websites to sell SEO and SMO services in local markets. I help our reps with preparation, research, and sales calls, so you could say it’s my job to talk about link building and on-page optimization. I find myself answering this question several times per week: “How important is link building to showing up better in search, and is there an honest way to do it?”

August 7, 2012 at 5:23 pm
We all know the answer is “very” and “yes”, but the post above explains it so well. For local businesses that may not have a budget to hire a content team and produce outstanding and interesting feature articles or similarly excellent content, it is often sufficient to have well-optimized pages, a consistent local presence as seen in directories, and a healthy, diverse group of backlinks that are built up honestly and gradually over time.

I would say that social links will certainly be more of a factor in areas that are more social. However, there are always going to be many types of websites where social networking is not especially appropriate, and these are the areas where traditional links will be a factor for many years.

August 9, 2012 at 5:59 pm
It is not easy to imagine another way to count “votes” on the internet without using links in some way.

Since many people know what it takes to rank high in the SERPs, some people work hard to manipulate the system to their advantage. I think one problem is the anonymous reality of the web. Perhaps in the distant future there will be a bigger focus on identifying the persons and organisations behind a link, and letting them carry the trust/link vote, rather than an anonymous URL.

August 14, 2012 at 2:34 pm