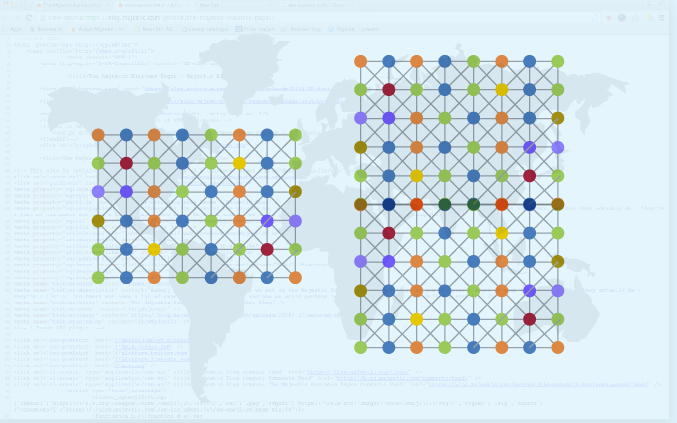

Majestic’s Fresh Index currently boasts a whopping 354.72 billion unique URLs crawled. That is more than double its size in September 2016, a dramatic rate of growth made possible by some technical wizardry. Here’s the obligatory screenshot.

Majestic Crawled Index

Before Getting Too Excited

The size debate (“mine is bigger than yours”) will probably rumble on forever, but it sometimes obscures the underlying data. Majestic can be proud, and fortunate, to have one of the largest indexes of the web anywhere in the world, let alone amongst the Linkerati tools. However, Majestic acknowledges that size is not everything. The way link counts are calculated is surprisingly complicated, as this old article on Moz demonstrates. Is a Blogspot site a subdomain or a root domain? Is a 301 from page A to page B a single link, or should every link pointing at page A now be added to the counts for page B?
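To make those two counting questions concrete, here is a minimal Python sketch. It is not how Majestic counts links. It only shows how the same raw data yields different numbers under different policies. The suffix sets, the example URLs, and the functions `root_domain`, `referring_domains`, `resolve` and `inbound_links` are all hypothetical, and real tools typically rely on something like the full Public Suffix List rather than a hand-written set.

```python
from urllib.parse import urlsplit

# Hypothetical suffix sets. Listing "blogspot.com" as a suffix makes every
# Blogspot blog its own root domain; leaving it out makes them subdomains.
SUFFIXES_WITHOUT_BLOGSPOT = {"com", "co.uk"}
SUFFIXES_WITH_BLOGSPOT = SUFFIXES_WITHOUT_BLOGSPOT | {"blogspot.com"}


def root_domain(url, suffixes):
    """Return the longest listed suffix of the URL's host plus one more label.

    If no listed suffix matches, the whole host is returned.
    """
    labels = urlsplit(url).hostname.split(".")
    for i in range(1, len(labels)):
        if ".".join(labels[i:]) in suffixes:
            return ".".join(labels[i - 1:])
    return ".".join(labels)


def referring_domains(links, target, suffixes):
    """Count distinct root domains with at least one link to `target`."""
    return len({root_domain(src, suffixes) for src, dst in links if dst == target})


def resolve(url, redirects):
    """Follow a chain of 301s to its final URL, stopping if a loop appears."""
    seen = {url}
    while url in redirects:
        url = redirects[url]
        if url in seen:
            break
        seen.add(url)
    return url


def inbound_links(links, target, redirects, fold_redirects):
    """Count links to `target` under one of two 301 policies."""
    if fold_redirects:
        # Every link aimed at a URL that (eventually) 301s to target is credited to target.
        return sum(1 for _, dst in links if resolve(dst, redirects) == target)
    # Otherwise each 301 into target counts as a single link, like any other.
    direct = sum(1 for _, dst in links if dst == target)
    return direct + sum(1 for dst in redirects.values() if dst == target)


# Hypothetical example data: (source URL, target URL) pairs and one 301 from A to B.
PAGE_A = "https://example.com/a"
PAGE_B = "https://example.com/b"
links = [
    ("https://alice.blogspot.com/post", PAGE_B),
    ("https://bob.blogspot.com/post", PAGE_A),
    ("https://carol.blogspot.com/post", PAGE_A),
    ("https://news.example.co.uk/story", PAGE_A),
]
redirects = {PAGE_A: PAGE_B}

print(referring_domains(links, PAGE_A, SUFFIXES_WITH_BLOGSPOT))       # 3
print(referring_domains(links, PAGE_A, SUFFIXES_WITHOUT_BLOGSPOT))    # 2
print(inbound_links(links, PAGE_B, redirects, fold_redirects=False))  # 2
print(inbound_links(links, PAGE_B, redirects, fold_redirects=True))   # 4
```

Same links, different answers: page A has three referring domains if each Blogspot blog is a root domain, but two if they are all subdomains of blogspot.com. Page B has two links if the 301 counts once, or four if everything pointing at page A is passed on to it.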

Everything is a compromise at scale, and different technologies make different operational decisions when answering these questions. Consequently, Majestic certainly does not always report the highest link counts. However, Majestic makes very good use of its Index, and Majestic Flow Metrics are currently hard to equal for quality. Majestic also remains focussed on its link graph to develop insights, and therefore avoids rank checking and traffic estimation. The upside of that approach for consumers is price: if you want great link intelligence, Majestic has it at the lowest entry price of any major data source. The best advice probably remains that having multiple link data sources in your SEO toolset is much better than relying on just one.
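As a rough illustration of why a second data source helps, the sketch below merges referring-domain lists exported from several providers and reports what each one finds that no other does. The provider names, the domains and the `merge_sources` function are made up for the example, and a real comparison would first need to account for each tool's different counting decisions.

```python
def merge_sources(sources):
    """Union referring domains across providers and find each provider's unique finds.

    `sources` maps a provider name to the set of referring domains it reports.
    """
    union = set().union(*sources.values())
    unique = {}
    for name, domains in sources.items():
        # Everything reported by any *other* provider.
        others = set().union(*(d for other, d in sources.items() if other != name))
        unique[name] = domains - others
    return union, unique


# Hypothetical exports from two link data providers.
sources = {
    "provider_a": {"alpha.com", "beta.com", "gamma.com"},
    "provider_b": {"beta.com", "delta.com"},
}
union, unique = merge_sources(sources)
print(len(union))                    # 4
print(sorted(unique["provider_a"]))  # ['alpha.com', 'gamma.com']
print(sorted(unique["provider_b"]))  # ['delta.com']
```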

Clearly, none of your link intelligence providers are standing still, which is great for SEOs. It does now look as though the different tools are beginning to develop different strategies, though. Points of differentiation are incredibly important for a healthy market.

Some Insights into the Technology Employed to Achieve These Results

Not being a TAGFEE company, I am not quite at liberty to give you as much detail as the Moz team would, but I can tell you that this did not happen overnight! We didn’t just add a bunch of servers and watch things double. Instead, we had to look at the entire data stack. As a distributed crawler, Majestic can generally crawl fast, but there is a difference between crawling quickly and crawling intelligently. Our Flow Metrics certainly help us crawl more intelligently than we could without them, but there is a cost to that: the metrics need a huge amount of number crunching. You first need to see the whole picture before you can start to do the maths, which means that much of the CPU work is not in the crawl itself, but in the analysis that prepares for the next crawl.

We were finding that there was so much spam at the bottom end of the funnel that it was significantly hampering the entire process. If SEOs in the West think they understand spam, they probably haven’t looked East… The new TLDs have created new nuances and challenges which I imagine all search engines are having to grapple with.
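The post does not say how Flow Metrics are calculated, so the sketch below uses plain PageRank-style power iteration as a generic stand-in. It is not Trust Flow or Citation Flow. It does illustrate why the whole graph is needed before the maths can start: every page's score depends on the scores of the pages linking to it. The resulting scores can then order the next crawl. The example graph, the `min_score` cut-off for skipping low-value (for example, spammy) URLs, and the function names are hypothetical.

```python
import heapq


def pagerank_scores(graph, damping=0.85, iterations=50):
    """PageRank-style power iteration over a whole link graph.

    `graph` maps each page to the pages it links to. Every linked page must
    also appear as a key (use an empty list for pages with no outlinks).
    """
    pages = list(graph)
    n = len(pages)
    scores = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Score held by pages with no outlinks is spread evenly so the total stays 1.
        dangling = sum(scores[p] for p in pages if not graph[p])
        new = {p: (1 - damping) / n + damping * dangling / n for p in pages}
        for page, outlinks in graph.items():
            if outlinks:
                share = damping * scores[page] / len(outlinks)
                for target in outlinks:
                    new[target] += share
        scores = new
    return scores


def next_crawl_order(scores, min_score):
    """Order URLs for the next crawl, highest score first, skipping any below `min_score`."""
    heap = [(-score, url) for url, score in scores.items() if score >= min_score]
    heapq.heapify(heap)
    return [heapq.heappop(heap)[1] for _ in range(len(heap))]


# Hypothetical graph: two pages nobody links to ("spam1", "spam2") point at "a".
graph = {
    "a": ["b", "c"],
    "b": ["c"],
    "c": ["a"],
    "spam1": ["a"],
    "spam2": ["a"],
}
scores = pagerank_scores(graph)
print(next_crawl_order(scores, min_score=0.05))  # ['a', 'c', 'b']
```

The two pages with no inbound links end up with the minimum possible score and fall below the cut-off, so the next crawl spends its effort on the better-connected pages first.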

Oh – and the Historic Index just went over the Trillion Mark!

As this post was being written, our Historic Index was also updated. Coincidentally, the number of crawled URLs in that index has gone over the trillion mark. Here are the current stats for the Historic Index, which you’ll notice have grown since this morning’s screenshot:


Hi Dixon, Thank you for these insights 😉

Wouldn’t it be possible to use the Majestic data to detect spam? Let’s say spammy links in emails, or building a browser plugin that blocks spam/low-quality sites.

January 28, 2017 at 11:11 am

For sure this is feasible. I had not thought about the email angle, though. I suspect that most email links go through tracking URLs, which may have zero Trust Flow and Citation Flow anyway. We would need to parse the links in your email client to get the full endpoint after all the redirects, and that would open us up to questions about your security, which we take pretty seriously. Email is a pretty personal place to be putting our technology. A browser plugin already exists, but at the moment it does not check links on the page, only the Flow Metrics of the URL you are currently on. It is a very interesting idea though, which I have thought about before but have not yet had the spare resources to implement. Thanks for the comment.

January 30, 2017 at 11:30 am