Over the last few months, our engineers have been working hard to improve our crawl methodology. Previously, our crawlers (we have hundreds) crawled links more or less at random, with the emphasis on collecting as many new URLs as possible.

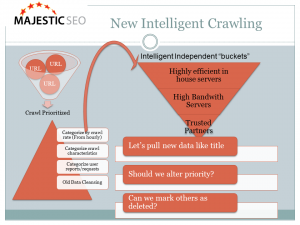

We think we have won the “mine is bigger than yours” debate. Now we want to crawl not just faster, but also smarter. We began by categorizing URLs according to how frequently a page needs crawling, which we judge from its referring-domain count and from whether it is a homepage or an inner page. In addition, we can flag sites that seem especially hard to crawl and give them a category of their own.
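As a rough illustration of this kind of categorization, here is a minimal Python sketch. It is not our production code: the `UrlInfo` record, the tier names, and the referring-domain thresholds (10,000 and 100) are all assumptions chosen purely for the example.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass(frozen=True)
class UrlInfo:
    """Hypothetical record of what we know about a URL before crawling it."""
    url: str
    referring_domains: int
    hard_to_crawl: bool = False


def is_homepage(url: str) -> bool:
    # Treat an empty path or a bare "/" as the homepage.
    return urlparse(url).path in ("", "/")


def categorize(info: UrlInfo) -> str:
    """Return a bucket name for the URL (thresholds are illustrative only)."""
    # Sites flagged as hard to crawl get a category of their own.
    if info.hard_to_crawl:
        return "hard-to-crawl"

    page_type = "homepage" if is_homepage(info.url) else "inner"

    if info.referring_domains >= 10_000:
        tier = "high"
    elif info.referring_domains >= 100:
        tier = "medium"
    else:
        tier = "low"
    return f"{tier}-{page_type}"
```

With these assumed thresholds, a homepage with 50,000 referring domains lands in the `high-homepage` bucket, while a little-linked blog post lands in `low-inner`.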

Soon we will also be able to categorize URLs based on user requests, so that if you have requested a report on a site’s backlinks, we can prioritize re-indexing its main backlinks, ready for the next update. We have not fixed a date for this new feature, but we are looking at a relatively short time horizon.

Next, we have become smarter about choosing which servers and crawlers index which “bucket” of URLs. Where we have problems indexing a particular domain, for example, we might route those crawl requests through in-house servers, where we can monitor the crawl very closely, and keep our worldwide servers for the URLs we expect to cause no issues. Each server has also, historically, crawled with a different amount of bandwidth, so URLs that need crawling most frequently can be routed to our highest-bandwidth servers.
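The routing idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Server` record, the bucket names (`"hard-to-crawl"`, and names starting with `"high"`), and the rule of sending everything else to the lowest-bandwidth worldwide server are hypothetical, not a description of our actual scheduler.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Server:
    """Hypothetical description of a crawl server."""
    name: str
    bandwidth_mbps: int
    in_house: bool


def route(bucket: str, servers: list[Server]) -> Server:
    """Pick a server for a bucket of URLs (illustrative policy only)."""
    in_house = [s for s in servers if s.in_house]
    worldwide = [s for s in servers if not s.in_house]

    # Problem domains go to in-house servers, where the crawl can be watched closely.
    if bucket == "hard-to-crawl" and in_house:
        return max(in_house, key=lambda s: s.bandwidth_mbps)

    # Everything else goes to the worldwide pool (fall back to all servers if empty).
    pool = worldwide or servers
    ranked = sorted(pool, key=lambda s: s.bandwidth_mbps, reverse=True)

    # Frequently crawled buckets get the highest-bandwidth server;
    # the rest are sent to the lowest-bandwidth one to keep capacity free.
    return ranked[0] if bucket.startswith("high") else ranked[-1]
```

For example, given one in-house server and two worldwide servers of 1000 and 200 Mbps, a `hard-to-crawl` bucket is routed in-house, a `high-homepage` bucket goes to the 1000 Mbps server, and a `low-inner` bucket goes to the 200 Mbps one.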

This means that the most important pages on the internet will now be crawled as often as every hour! That’s a truly industrial scale, and whilst few pages need that sort of treatment, more and more pages will be recrawled more frequently, because we are responding, at an algorithmic level, to user demand.
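To make the scheduling idea concrete, here is a minimal Python sketch of a recrawl queue ordered by due time. Only the one-hour interval for the most important pages comes from the text above; the tier names and the daily and monthly intervals are assumptions made up for the example.

```python
import heapq
from datetime import datetime, timedelta

# Only the hourly figure is stated above; the other intervals are assumed.
RECRAWL_INTERVAL = {
    "high": timedelta(hours=1),
    "medium": timedelta(days=1),
    "low": timedelta(days=30),
}


def build_schedule(urls_by_tier, now):
    """Return a min-heap of (due_time, url) pairs, earliest recrawl first."""
    heap = []
    for tier, urls in urls_by_tier.items():
        for url in urls:
            heapq.heappush(heap, (now + RECRAWL_INTERVAL[tier], url))
    return heap


def next_due(heap):
    """Pop the URL whose recrawl is due soonest."""
    return heapq.heappop(heap)
```

A crawler would call `next_due` repeatedly, fetch the page, and push it back with a new due time based on its current tier.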

We are also getting more intelligent about the crawl itself. We already pull the page title as well as the anchor text of a link when we crawl, but there are a couple of other things we can now do during the crawl. The first is to determine whether we need to crawl the page more frequently (and no, changing the time stamp on the fly won’t make a difference; first and foremost you need pages that are important anyway). The second is that we can now make some heuristic decisions when we find that a page or link has disappeared. If the page times out, it has probably been deleted. If it has been deleted, then all the links previously on that page are also effectively deleted. We mark these as such on the fly, without even trying to re-spider those links. Over a few indexing cycles, this should dramatically improve the quality of the index.
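The deleted-page heuristic can be sketched as follows. This is a toy in-memory model, not our index format: the `LinkIndex` class and its method names are hypothetical, and a real crawler would decide that a page is gone from timeouts or error responses before calling `mark_page_gone`.

```python
class LinkIndex:
    """Toy link index: tracks which links exist and which are marked deleted."""

    def __init__(self):
        self.links_from = {}   # source URL -> set of target URLs
        self.deleted = set()   # (source, target) pairs marked as deleted

    def add_link(self, source, target):
        # Seeing a link again during a crawl makes it live again.
        self.links_from.setdefault(source, set()).add(target)
        self.deleted.discard((source, target))

    def mark_page_gone(self, source):
        """Mark every link found on a vanished page as deleted, without re-crawling."""
        gone = {(source, target) for target in self.links_from.get(source, ())}
        self.deleted |= gone
        return len(gone)

    def live_backlinks(self, target):
        """Return the sources that still link to target, in sorted order."""
        return sorted(
            source
            for source, targets in self.links_from.items()
            if target in targets and (source, target) not in self.deleted
        )
```

If page `a` links to `x` and `y`, page `b` links to `x`, and `a` then disappears, calling `mark_page_gone("a")` removes both of its links in one step, leaving `b` as the only live backlink to `x`.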

Great work in getting the information to us with accuracy. I like the idea of categorizing URLs. You are definitely the leaders in the field of reporting links. Keep up the good work; good information is hard to find.

John

December 19, 2010 at 3:39 pm

SEO Expert and Marketing Strategist