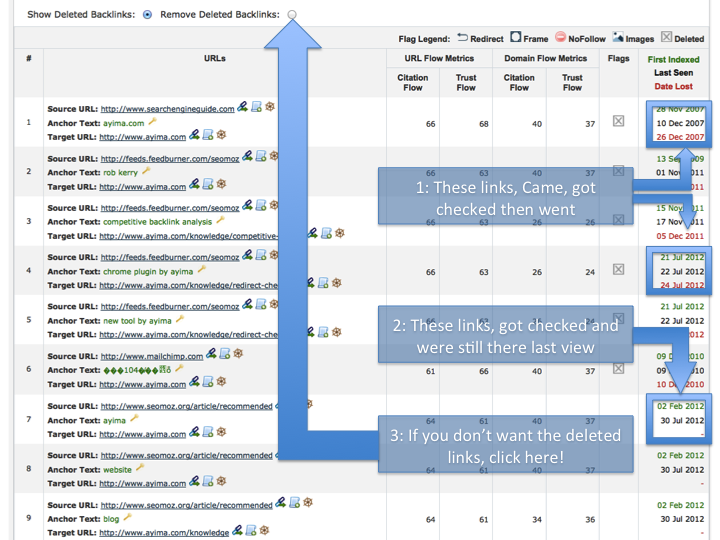

We feel we have the cleanest link data commercially available (unless you subscribe to an extra service that re-crawls links like ours on demand), so we are surprised when anyone reports that our Fresh Index has large numbers of unreported dead links. It turns out this is usually due to a misinterpretation of the data. To make it totally transparent, for every link we now list the date our crawler first found it, the date we last saw it and, if the link disappears, the date the link was lost. The dates are relative to the index used (Fresh or Historic).

This should pretty much make everything clear, transparent and as easy to filter as possible.
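As a rough illustration of what filtering on these three dates could look like once a report is loaded, here is a minimal Python sketch. The `LinkRecord` class and its field names are hypothetical stand-ins for illustration, not the actual column names in our reports, and the sample rows are invented.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class LinkRecord:
    """Hypothetical record for one backlink; field names are assumptions."""
    source_url: str
    first_found: date
    last_seen: date
    link_lost: Optional[date] = None  # None while the link is still believed live


def live_links(records: List[LinkRecord]) -> List[LinkRecord]:
    """Keep only links with no 'link lost' date."""
    return [r for r in records if r.link_lost is None]


def lost_between(records: List[LinkRecord], start: date, end: date) -> List[LinkRecord]:
    """Keep only links whose 'link lost' date falls in [start, end]."""
    return [
        r for r in records
        if r.link_lost is not None and start <= r.link_lost <= end
    ]


# Invented sample rows, purely for demonstration.
records = [
    LinkRecord("http://example.com/a", date(2012, 8, 1), date(2012, 9, 20)),
    LinkRecord("http://example.com/b", date(2012, 7, 15), date(2012, 9, 1),
               link_lost=date(2012, 9, 15)),
]
```

Calling `live_links(records)` keeps the first row only, while `lost_between(records, date(2012, 9, 1), date(2012, 9, 30))` returns the second.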

The new date functions are available in both the Fresh Index and the Historic Index. So here’s how it works:

We know there is baggage in our historic data, which is why we built the Fresh Index in the first place. EVERY link in the Fresh Index has been seen within the previous 60 days, and most are checked much more frequently than that. With this level of detail, we hope the UX is intuitive enough, but do let us know in support if we can make it any slicker.
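The 60-day rule can be expressed as a simple date comparison. The sketch below only illustrates the idea; the function name `in_fresh_window` is hypothetical, and this is not how the index itself is built.

```python
from datetime import date, timedelta

FRESH_WINDOW_DAYS = 60  # the window stated in the post


def in_fresh_window(last_seen: date, today: date,
                    window_days: int = FRESH_WINDOW_DAYS) -> bool:
    """True if the link was last seen within the previous `window_days` days."""
    age = today - last_seen
    return timedelta(0) <= age <= timedelta(days=window_days)
```

For example, a link last seen on 1 August 2012 is 54 days old on 24 September 2012, so it would fall inside the window; one last seen on 1 July 2012 would not.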

We needed to do a sanity check on our data: we had to verify that our Fresh Index reporting of one particular site was indeed accurate to within a reasonable tolerance. This meant that over last weekend we found ourselves re-crawling 824,355 backlinks to SearchEngineLand.com. As if we didn’t have better things to do.

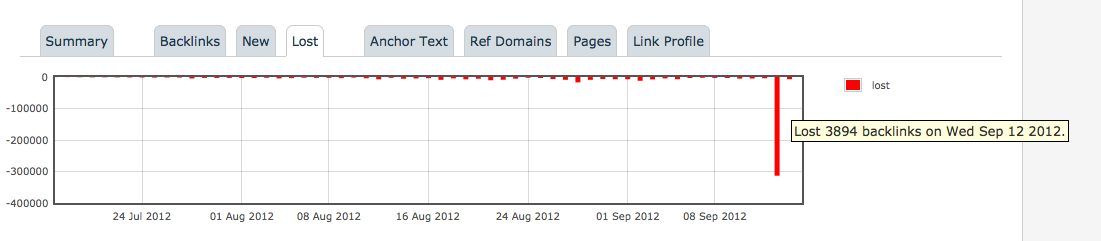

As a by-product of this slight diversion, our brand spanking new “Lost Links” graph shows a big spike in the number of links apparently “Lost” by Search Engine Land on a single day. We would like to sincerely apologize to Search Engine Land for any minor misconceptions this may cause. In a typical day, SEL loses maybe 2,000 to 5,000 or so links and tends to accumulate many more. This is natural, as sites use SEL’s news feed as a primary news source. As the news stories come off the front page of news sites, so do the links.
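To see why a bulk recrawl shows up as a one-day spike, it helps to think of the graph as a count of “Link lost” dates per day. The sketch below assumes you already have a list of such dates; the function names, the median-based threshold and the sample numbers are all invented for illustration and are not how our graph is produced.

```python
from collections import Counter
from datetime import date
from statistics import median


def lost_per_day(lost_dates):
    """Count how many links were marked lost on each day."""
    return Counter(lost_dates)


def spike_days(counts, factor=5.0):
    """Return days whose count exceeds `factor` times the median daily count.

    The median-based rule is an assumption chosen for simplicity.
    """
    if not counts:
        return []
    typical = median(counts.values())
    return sorted(day for day, n in counts.items() if n > factor * typical)


# Invented data: three ordinary days and one day inflated by a bulk recrawl.
sample = (
    [date(2012, 9, 20)] * 3
    + [date(2012, 9, 21)] * 4
    + [date(2012, 9, 22)] * 40
    + [date(2012, 9, 23)] * 2
)
counts = lost_per_day(sample)
```

With this sample, `spike_days(counts)` flags only 22 September, the day on which many lost links were discovered at once rather than spread over the days they actually disappeared.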

Recrawling data in the range of ~1 million links requires pretty good technical capability, which we happen to have. Alex outlined the full process and data files so anyone can check the data for themselves:

Step 1: Search for searchengineland.com in Site Explorer.

Step 2: Create an Advanced Report for searchengineland.com in the Fresh Index using our system, then download all data using the default settings, which exclude known deleted links and mentions but allow links marked as nofollow (we’ve created a download file with the data here: http://tinyurl.com/majesticseotestfile1 , 25 MB compressed).

Step 3: Extract the unique backlinks from that big file (download: http://tinyurl.com/majesticseotestfile2 ).

Step 4: This is where one needs to recrawl nearly 1 million URLs, parse the HTML correctly and build a backlinks index to extract the data again, in order to check how many links are still present. Competent level of TECHNICAL EXPERTISE REQUIRED! 🙂

Step 5: We used our newly built index with this data to create the same CSV file as in step 2 (download file here: http://tinyurl.com/majesticseotestfile3 ).

Step 6: Count the number of unique ref pages in the file above (download results here: http://tinyurl.com/majesticseotestfile4 ).

(In case anyone is interested, the final ratio of unique LIVE links still present turned out to be 91%!)
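For anyone who wants to repeat the check on a small sample, the core of steps 3, 4 and 6 can be sketched in Python using only the standard library. This is a toy version under stated assumptions, not the pipeline we ran: the CSV column name `SourceURL` is hypothetical, fetching the pages is left out entirely (the HTML is passed in), and a real crawl would also need to handle redirects, robots.txt, encodings and scale.

```python
import csv
import io
from html.parser import HTMLParser
from urllib.parse import urlparse


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def links_to(html, target_domain):
    """True if the page has at least one link to target_domain or a subdomain."""
    collector = LinkCollector()
    collector.feed(html)
    for href in collector.hrefs:
        try:
            host = (urlparse(href).hostname or "").lower()
        except ValueError:  # malformed URL, skip it
            continue
        if host == target_domain or host.endswith("." + target_domain):
            return True
    return False


def unique_source_urls(csv_text, column="SourceURL"):
    """Step 3: the set of unique referring pages (column name is an assumption)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {row[column] for row in reader if row.get(column)}


def live_ratio(pages, target_domain):
    """Step 6: share of pages still linking to the target.

    `pages` maps each source URL to its re-fetched HTML, or None if the
    fetch failed.
    """
    if not pages:
        return 0.0
    live = sum(1 for html in pages.values() if html and links_to(html, target_domain))
    return live / len(pages)
```

Given a dictionary of re-fetched pages, `live_ratio(pages, "searchengineland.com")` returns the fraction that still link to the site, which is the kind of figure quoted above.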

I hope the clarity of showing every “First found” date, every “Last seen” date and, where a link has been deleted, the “Link lost” date will save everyone having to go through such a tortuous process themselves, and that these dates will help to clarify that our data is unquestionably clean. We feel this is about as legitimate and transparent as we can get.

Of course, even 91% is not perfect for everyone, so we have a number of partners who have built significant added value on top of our link data. Some of these partners recrawl the source URLs and not only verify the links regularly, but can also index the content or other areas of the page. Example partners are rotated here. Majestic SEO, however, remains the Largest, Fastest, Freshest and now Cleanest full link map on the Internet.

Dixon

Thanks for the post.

As you know, we have several hundred clients using your data. It’s our view that the Fresh Index has improved the quality of your data significantly.

Listing the dates when you last ‘saw’ a link will be a really useful indicator too. Thanks for adding that.

Justin

September 24, 2012 at 1:44 pm

CEO, Wordtracker

You are welcome Justin. I hope you’ll be able to use this in Wordtracker (if you aren’t already!) As you know, we try to maintain an aggressive development plan. Things don’t always go as smoothly as we would like, but I think we do pretty well, given the scale of the challenge.

September 24, 2012 at 6:07 pm

Listing the dates when you last ‘saw’ a link will be a really useful indicator too. Thanks for adding that.

September 25, 2012 at 4:57 am

Does Majestic include whether a link is cached in Google? Would be good to know which links/sites are de-listed, that is all.

September 25, 2012 at 10:59 am

No. Scraping Google is against Google’s terms and therefore potentially an Intellectual Property violation that might result in legal action. Sorry.

September 25, 2012 at 11:44 am

Would love to know your bandwidth fees. Crawling the whole web cannot be cheap. Anyway, I find your data better than OSE by far. Also, results are returned far quicker. I find their data is occasionally really old and out of date.

The only thing I am missing is a page rank checker on the links. That would just be the dogs danglies.

September 25, 2012 at 3:10 pm

We think OSE has strengths that we still aspire to, but thank you. However, getting SERP data from Google at scale is not something we can do whilst maintaining our transparency and legitimacy. Google forbids SERP scraping. I know people do it, but not without long term, potentially legal, consequences I expect.

September 25, 2012 at 3:55 pm

Dixon, how about the PageRank thing? PR is not SERPs.

If it is forbidden, I am not sure how SEMrush gets their data. Their tool is excellent also.

September 25, 2012 at 8:12 pm

Again, we would not want to use PageRank. In actual fact, Citation Flow correlates surprisingly well with PageRank, but we feel that our metrics are more advanced than PageRank. PageRank is, after all, nearly 20 years old. I feel a blog post coming on explaining why Flow Metrics are more important than PageRank… 🙂

September 26, 2012 at 9:34 am