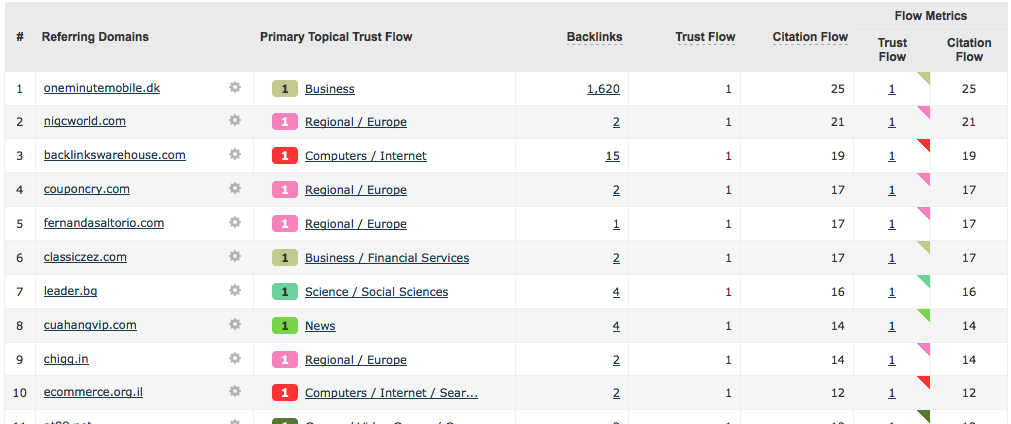

This is an incredibly easy way to get a list of spammy domains that link to any site. It’s a new feature, made possible only now that we have improved our sorting filters and integrated our Top Backlink functionality. Here’s what we think is a list of spammy-looking domains that link to our own site right now:

Easily get a Spam Finder list for any site…

It takes 20 seconds with a paid account:

1: Type the domain you want to find spam for on the home page and hit enter.

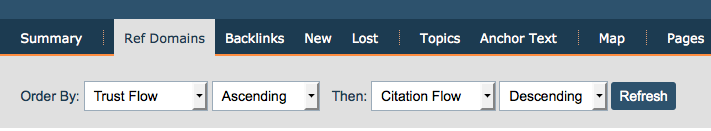

2: Click on the Ref Domains tab.

3: Sort the list by Trust Flow ascending, then Citation Flow descending.

4: If you click “Download Data” you can also export this list to Excel or CSV formats.
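If you export the list, the same ordering can be reproduced offline. The following is a minimal sketch, not an official tool: the column names `Domain`, `TrustFlow` and `CitationFlow` are assumptions, so check the header row of your own export and adjust them.

```python
import csv
import io

# Hypothetical column names; check the header row of your own export.
DOMAIN, TRUST, CITATION = "Domain", "TrustFlow", "CitationFlow"


def spam_candidates(csv_text, limit=20):
    """Return domains sorted by Trust Flow ascending, then Citation Flow descending."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    # Negating Citation Flow makes the second sort key descending.
    rows.sort(key=lambda r: (int(r[TRUST]), -int(r[CITATION])))
    return [r[DOMAIN] for r in rows[:limit]]


# Made-up sample data, for illustration only.
sample = """Domain,TrustFlow,CitationFlow
good.example,40,38
spam-a.example,1,35
spam-b.example,1,50
thin.example,3,4
"""

print(spam_candidates(sample, limit=3))
```

The domains at the top of the result are the ones with the lowest Trust Flow and, among those, the highest Citation Flow.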

Try it on a site or two… does it work for you? If so, could you share it on your favorite social network?

Why does this Spam Finder tool work?

Sites with low Trust Flow but high Citation Flow exhibit a particularly unusual link profile. These sites tend to have links… which used to be seen as a strong signal by search engines… but these links turn out to be coming from anywhere EXCEPT sites that are trustworthy. In a random world, Citation Flow and Trust Flow tend to converge at scale. If they don’t, that is a red flag, and generally with good reason.
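To make the "low trust, high citation" idea concrete, here is a rough sketch of a gap check. The thresholds `max_trust` and `min_gap` are illustrative assumptions only, not values recommended by Majestic, and the sample scores are made up.

```python
def looks_spammy(trust_flow, citation_flow, max_trust=5, min_gap=20):
    """Flag a domain whose Citation Flow far exceeds a very low Trust Flow.

    Both thresholds are illustrative assumptions; tune them for your own data.
    """
    return trust_flow <= max_trust and (citation_flow - trust_flow) >= min_gap


# Made-up (Trust Flow, Citation Flow) pairs, for illustration only.
domains = {
    "good.example": (40, 38),  # scores converge: nothing unusual
    "spam.example": (2, 45),   # large gap with low trust: red flag
    "thin.example": (3, 4),    # low trust but few links: not flagged
}

flagged = [name for name, (tf, cf) in domains.items() if looks_spammy(tf, cf)]
print(flagged)
```

A check like this only narrows down candidates; each flagged domain still deserves a manual look.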


Thank you for the advice. I tried this method on my sites and it works. I suggest adding a filter to remove nofollow and deleted spam links, so that we can focus our efforts only on the links that can really penalize our site.

April 23, 2015 at 10:12 am

Hi Alexandre,

April 24, 2015 at 12:36 pm

The fact that a link is listed as deleted in Majestic does not imply that Google has detected it as actually "deleted": there are millions of pages on the web that Google rarely visits, and we can assume that a page with low Trust Flow and high Citation Flow (= a potential spam page) will not be visited regularly by Google. This means that you need to take this into account and take proactive measures to clean your backlink profile for such links too: this is the only way you can be sure that the link will (sooner or later) be removed by Google.

Thanks for this tool. Can you give one or two proactive measures one can take to handle spam links?

April 28, 2015 at 6:34 am

Thanks victor. We try to be custodians of the data, not consultants, but realistically your options are to disavow them or ignore them. I think that most of them were generated by some automated process in the past. This makes requesting takedowns from site owners highly ineffective, but if you do this, use the comments in your disavow file to document your efforts, with dates. Also, I would generally only disavow by domain except in very isolated cases. Links can easily change which URLs they reside on as blogs and sites develop, so if you were to disavow by page, you might find the effect short-lived. If the page on your site is now irrelevant and burnt by negative SEO, you might try a 301 onto the perpetrator, but that’s very risky at best. Then, on the moral high ground, there is a school of thought that says getting a few really REALLY good links can do a lot to dispel the effect of the poor stuff.
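As an illustration of a domain-level disavow file with dated comments, here is a minimal sketch. It assumes the plain-text format Google documents for disavow files (lines starting with `#` are comments, `domain:` entries cover a whole domain); the helper name `build_disavow`, the note text and the sample domains are all hypothetical.

```python
from datetime import date


def build_disavow(domains, note, when=None):
    """Build disavow file text: one dated comment, then domain-level entries."""
    when = when or date.today()
    # Lines starting with "#" are treated as comments in a disavow file.
    lines = [f"# {when.isoformat()}: {note}"]
    # "domain:" entries disavow the whole domain rather than a single URL.
    lines += [f"domain:{d}" for d in sorted(set(domains))]
    return "\n".join(lines) + "\n"


# Made-up domains and note, for illustration only.
text = build_disavow(
    ["spam-b.example", "spam-a.example", "spam-a.example"],
    "Takedown requests sent, no reply",
    date(2015, 4, 28),
)
print(text)
```

Duplicates are dropped and entries are sorted so that the file stays easy to review when it is updated later.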

April 28, 2015 at 8:14 am

Too bad you won’t find the links with no Trust Flow value this way. Seems it filters out Trust Flow with value "-" from this view; works when Citation is sorted as descending as well.

May 15, 2015 at 11:18 am

Perhaps you can modify your filtering to interpret "-" (no value) as 0? Actually, on second thought, it should be valued less than 0 to give a correct sorting.

That’s technically more challenging (and potentially less useful) than you might imagine, Mathias. The ones with a "-" pretty much have few or no links, not "bad" links. That is to say, they are almost only ever discovered through internal site crawling. So the ones with "-" are so generic/formulaic/automated/poor that even indexing them in the Site Explorer list would just slow down the whole system when looked up in this order, I think, so technically we don’t include them in the data set we are returning at all.

May 15, 2015 at 11:54 am

Yes, that is true as you say, Dixon; nevertheless, I really like this feature.

What I’m really interested in seeing, with the ease of above sorting, is links/domains with 0 Trust and high Citation. Which it isn’t showing atm, unless you sort differently and sort of "backwards". It would be awesome if it truly would work as intended 🙂

May 15, 2015 at 2:48 pm

OK. There should be a way to do this… (Let’s hope this works for you…) Limsan wrote it up at https://blog.majestic.com/general/power-user-tips-advanced-report-filtering/ but basically, get an advanced report and then use the advanced filters to recreate it with just the data you want. The bit in that article that will help is near the bottom.

May 15, 2015 at 2:56 pm