There have been a number of product comparisons between backlink index providers ever since Majestic and Moz ( then MajesticSEO & SEOMoz ) transformed the backlinks intelligence market with their pioneering toolsets.

SEO and Affiliate marketer Matthew Woodward has generated significant debate in SEO & Professional blog circles for his “Majestic Million” showdown over the years – and his latest incarnation of this test appears to be no exception.

Majestic have, in some ways, made Matthew’s overall job slightly easier because the free to download Majestic Million has been used as the foundation for Matthew’s series of reviews – setting an unintended public benchmark for others to be seen to challenge. Whilst the public nature of the dataset appears to have given Majestic an automatic seat in the review, I do want to acknowledge the leg work Matthew must have had to do with other tool/data providers who don’t offer similar data sets to the Majestic Million to facilitate his comparisons.

Even in terms of relationship management, Matthew appears to have set himself a task of Herculean scale, and, to his credit, the results of his labours have provoked some debate amongst the community about the various facets of different tools.

It is very natural to want to compare backlink index providers, however, as Matthew has highlighted, comparison is fraught with difficulty. The purpose of this post is to share a perspective on some of the challenges in trying to compare the backlink tools.

A fundamental problem in trying to compare Indexes from different providers is the various players are likely to have developed their own, different approaches to building backlink indexes, which adds further complexity to a “problem space” that respected industry expert ( and Moz employee ), Russ Jones refers to as “not an apples to apples measurement”.

Why don’t all providers use the same algorithm to make comparison easy?

This post looks at at the backlink index comparison problem from two perspectives which I believe are pretty fundamental to the problem space. I am not the first person to highlight this – most recently, Russ Jones, and Ahrefs ( Ahrefs as quoted on Matthew’s article ) have separately highlighted aspects of the following issues :

- How long the data is retained for ( the backlink index providers use different retention strategies here )

- How the summary total counts are calculated

Even if all providers used the exact same algorithm to count index size, the numbers may still vary as a consequence of different indexes varying the time window of capture.

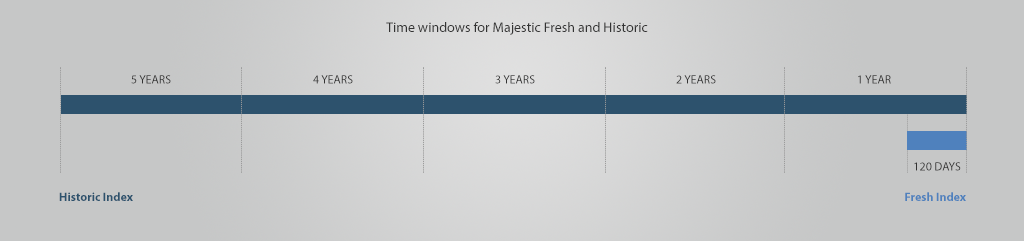

One of the benefits that Matthew’s article brings to the table is a better understanding of the time windows associated with the different providers. Why is this important? I’ll use the two indexes associated with Majestic to demonstrate the impact of time on index comparison:

Majestic are well known for their “Fresh” and “Historic” Indexes, which hold around 4 months and 5 years worth of data respectively. A motivation behind having two indexes is simple – the bigger the index, the longer the time taken to build it. The Customer benefit is a different matter – whilst both indexes can be useful for Link Audit, there are some customer use cases for which Fresh is better, and others where Historic is by far the better choice.

Recently, other backlink providers have tuned into the customer demand of running different Fresh/Live/New/Old/Historical indexes and tried to produce similar products to Majestic – separating Indexes into multiple products and letting the user choose which to query. However – the length of time windows in these indexes greatly impact the corresponding headline numbers associated with the product – Just because indexes from different providers sound like “Fresh”, it doesn’t mean they have the same time window.

From a time window perspective, Majestic Fresh ( on which Matthew’s review is based ) is around a 15th of the scale of Historic. No one would expect Majestic Fresh to compare directly with Majestic Historic, because the time windows are different. Ahrefs appear to make a similar claim in Matthews article – that comparing Indexes with different purposes, and hence different time windows adds a complexity to comparisons which may not be fair, and it is a point which I find difficult to disagree with!

Now we have looked at challenges with measuring indexes with different time windows, let’s look at the second dimension – that of the totals used to compare!

Backlinks counting – an early measure of page success?

One of the lessons coming out of Matthews series of articles that is easily acknowledged is: comparing numbers of backlinks is not a great way of measuring index size. Experienced SEO’s know that backlink quantity is pretty far down the priority list outside of link audit – a recurring theme over the last few years is a focus on backlink quality.

So why do backlink databases share backlink totals? There are perhaps two reasons – the first – a legacy matter – many, many years ago, pre-penguin, there was more focus in the SEO industry on backlinks quantity, and good independent measures of webpage popularity were hard to come by – so backlink counts was one of very few independent statistics available. Another reason backlinks counts may be important is that such totals can be an indicator of the number of rows of data in a backlink database.

Given how easy it is for websites to generate millions and millions of linking relationship, a view on what is a true and spurious link will differ greatly between the different algorithms used by different providers – and that’s without considering any impact of sanitising URLs on different use cases…

The ( questionable ) merits of Referring Domain comparison…

One might hope that counting referring domains should be far more reliable than backlinks – however – to count referring domains, one must first decide what a referring domain is, and there are unfortunately different ways to determine what makes a domain.

Majestic sets a high barrier when it comes to counting domains – focusing on domains where there should be a barrier to entry. Majestic has been in the backlinks business for a very long time, and their domain metric was initially designed to be a more trustworthy number to compare page popularity than backlinks.

Some providers take a slightly more liberal view of what a domain is than Majestic – there is nothing right or wrong about different definitions of what a domain is, but it is important to note that different domain definitions can result in large variations of ref domain counts. Like many of the other “counts” covered here, it’s important the person looking at a refdomain count from different providers appreciates some of the causes of potential variance.

What is a Referring Domain?

A referring domain is a backlink expressed as a relationship between two different domains. There appear to be different interpretations of what constitutes a “different domain”.

At Majestic, a fairly conservative methodology is used for domain name counting – Majestic try to focus on the domains people pay for using a relatively simple algorithm that recognises, for example, hostnames directly under public suffixes like .com, .org and a number or regional variations as domains. Other backlink providers may expand the definition of what they consider a domain to include “private suffixes” like blogspot.com and many, many others – so anyone with a blog hosted under the blogspot.com root domain counts as a referring domain. Other backlink providers may go further still and count all hostnames as referring domains – so a link which is repeated across de.majestic.com, fr.majestic.com and es.majestic.com could count as “3 referring domains” in hostname based counting!

A walk-through of this method follows – using the same seed set and different algorithms to produce different totals:

| Example Candidate hostnames list |

|---|

| www.bbc.co.uk www.searchenginejournal.com www.wikipedia.org www.searchengineland.com dollartanvir.blogspot.com ewlorettaparks642.blogspot.com seomining.blogspot.com de.majestic.com fr.majestic.com es.majestic.com |

We can now look at this data and apply different domain recognition algorthms to the same input data to produce different counts:

| Conservative TLD recognition | Domains Recognised when Including Private Domain names | Domains recognised by “All hostnames qualify” | |

|---|---|---|---|

| Algorithm produces: | bbc.co.uk searchenginejournal.com wikipedia.org searchengineland.com blogspot.com majestic.com | bbc.co.uk searchenginejournal.com wikipedia.org searchengineland.com dollartanvir.blogspot.com ewlorettaparks642.blogspot.com seomining.blogspot.com majestic.com | www.bbc.co.uk www.searchenginejournal.com www.wikipedia.org www.searchengineland.com dollartanvir.blogspot.com ewlorettaparks642.blogspot.com seomining.blogspot.com de.majestic.com fr.majestic.com es.majestic.com |

| Resulting Ref Domain Count: | 6 | 8 | 10 |

| Conclusion: | Typically Lowest Ref Domain count for same data. | Increased Ref Domain counts for same data. | Highest count for algorithms illustrated. |

Even though the raw data is the same size for each algortithm, the resulting counts might suggest that the “all hostnames” method produces two thirds more referring domains than the conservative method – even if the raw data is equivalent!

This does not mean that one method of counting ref domains is wrong and the others right – it just means that trying to compare the Ref Domains totals from one index with another may not be an “apples-to-apples comparison” because Ref Domain counts, like backlink counts, IP counts and Subnet counts, may vary between providers even if the raw data was identical due to algorithm differences!

IP/Subnet Counting

Russ Jones highlighted some of this matter, but here is my take in my own words: Whilst IP counting may feel like it should make for a clearer comparison than Ref Domain, there is still possible cause for ambiguity.

Here is an issue: An IP can host many websites. A website can be hosted by many IPs.

This issues means that a backlink index needs a strategy for IP counting – two of which are:

- Record all IPs found for a given website for a period of time

- Record the last IP ( or more correctly, a recently resolved IP ) for each website

Majestic go for Option 2 – capping the IP, and hence subnet count at a maximum of one per domain – other providers may go for the option of counting all IPs/subnets seen. Counting as many IPs as possible would boost headline counts but I’m personally not sure of the end user benefit – though no doubt, other people’s perspective may vary.

Domain based capping could be considered to be a route to resolve the differences in IP counting mentioned above – however, this approach requires caution – when IP counts are constrained with domain based IP capping, the domain recognition algorithm becomes hugely significant, as a conservative calculation of referring domains will intrinsically reduce any domain capped ip count produced.

Conclusion

Whilst it’s been some time since anyone from Majestic commented on Matthew’s experiment, it is hoped that this post highlights the scale of the challenge of comparing index size – not just on relative measures like backlinks, ref domains or IPs, but also because of the critical aspect of time windows on an index.

Whilst there are aspects of the backlink provider comparison blog post I would be uncomfortable to be seen as endorsing ( hence the lack of a direct link ), Matthew is to be congratulated for the level of debate generated around his articles, especially as new players enter the market.

Whilst some more exprienced industry figures may consider my post as stating the obvious, it’s important to note that the field of digital marketing is subject to constant change. It’s easy for some of us to take for granted the experience and skill we have built up over the years. This act of taking our own knowledge for granted can act as a barrier to new starters in our industry, or for those professionals for whom data analysis is a peripheral part of their workload. I hope this post can contribute to meaningful product comparison by shining a light on areas where there may be subtle but important product differences.

However – the most important comparison of modern backlink index providers does not feature here. All the main players on the market go far beyond lists of backlinks. Lists of backlinks are a little out of date without meaningful metrics with which to sequence and prioritise them.

The leading companies in this market perform significant analysis to produce meaningful metrics, such as Majestic Trust Flow and Citation Flow, or Moz Domain Authority. These metrics provide the background for more meaningful link evaluation than simple metrics based on basic arithmetic featured above.

- Introducing Duplicate Link Detection - August 27, 2021

- Python – A practical introduction - February 25, 2020

- Get a list of pages on your site with links from other sites. - February 7, 2020

Well written. There is another issue of robots.txt (https://www.joeyoungblood.com/link-building/theres-data-missing-from-your-link-analysis-tools-and-heres-why/) which can cause a different "shape" to the index. That is to say, two indexes might have the same number of links but very different counts per domain because robots.txt blocks different crawlers.

Unfortunately, there is unlikely to be agreement in our industry on certain issues like what should be considered a referring domain (if not for the fact that getting that right will make your proprietary metrics more accurate).

I stand by my career-long claim that if you need link data, you should look at all the major providers. Expensive? Yes, but that is the price you pay for accuracy.

September 18, 2019 at 2:23 pmThanks for the generous feedback Russ, and for the relevant third party link.

Like you, I also subscribe to the view that a "basket of backlinks providers" yields a thorough analysis. It’s a position I’ve validated by lurking quietly at the back of talks, listening to Agency leads describe how they use SEO tools in the wild.

September 18, 2019 at 2:55 pmExcellent post and appreciated the clarification and transparency. Curious where you feel accuracy falls into the equation. Not sure if you saw this little test I ran https://theupperranks.com/blog/best-backlink-checker/ but would love to hear your thoughts.

September 18, 2019 at 3:06 pmDear David,

Thanks so much for your comment, and request for feedback. I try to read as many comparisons as possible, but have to admit not hearing of yours until reading your comment.

As you will be aware, all tests have limitations, and I applaud the clarity in which you define the use-case for your "live links" test. I did feel that an area that could be a little clearer is a declaration of which index from each provider you are using for your test – Majestic Fresh or Historic? Ahrefs Live, Fresh, or Historical? Other areas to consider is if your test deprecated links marked as deleted prior to inclusion, and to which HTTP status codes represent a live link?

September 18, 2019 at 4:31 pmNot a problem, Steven and was just looking at fresh/live links, of course.

Would not have been a very accurate case study otherwise 😉

September 22, 2019 at 7:08 pmI will appreciate you for your an Excellent unbias post and your clear clarification. Google recently updated its algorithm which not counting</a> the numbers of backlinks on priorities and can also determine fack backlinks in this scenario I am Curious what the result your basket of backlinks providers fit in new algorithms or not? What was the accurate impact on SEO? I am not sure but love to read your comments. (Edit: Link Removed)

September 23, 2019 at 7:02 amHow long the data is retained for ( the backlink index providers use different retention strategies here ) (Edit: Link Removed)

October 6, 2019 at 6:23 pm