The Site Explorer is by far the most popular tool used on Majestic. That is closely followed by our Bulk Backlink Checker, a way to speed up analysing multiple links at once. Due to the popularity of the tool, we decided it was high time for a little makeover.

Oh, that’s pretty!

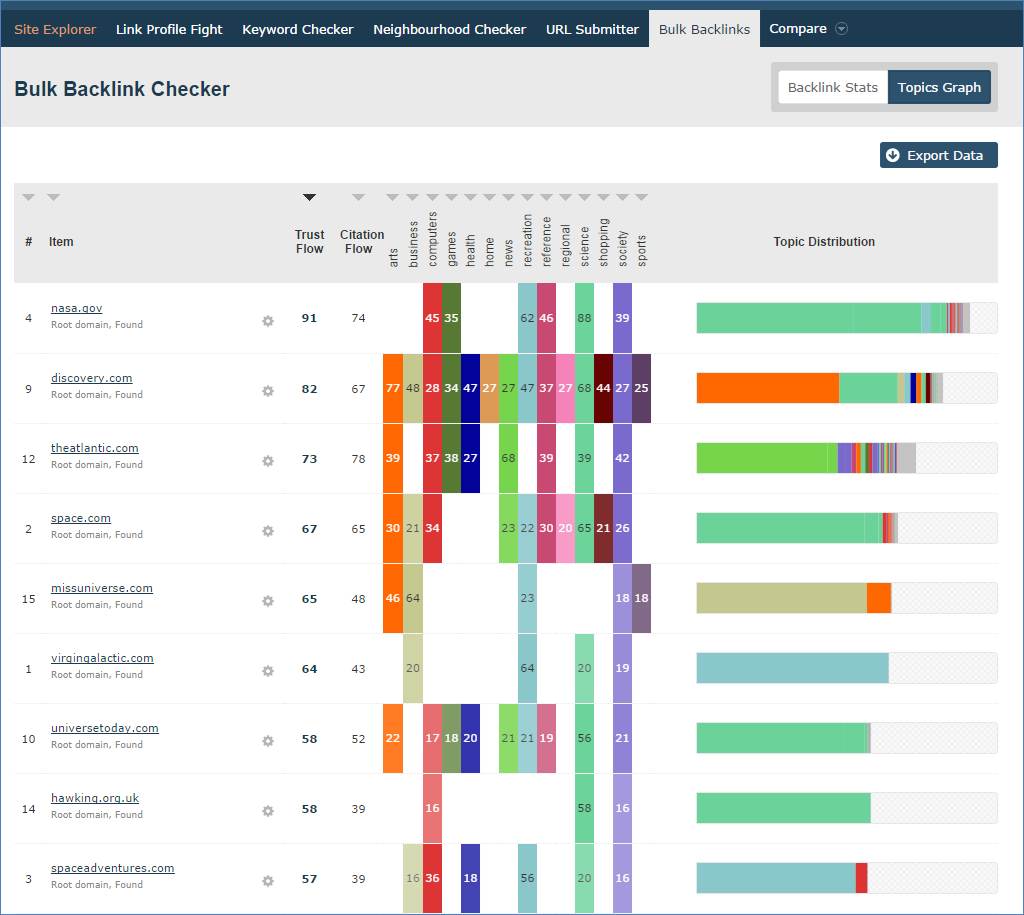

With the new Topic chart we have added, it has never been easier to understand how we use the distribution of a site’s topics to classify it. The chart is clearly presented, so you can now pinpoint the site (or URL) that is the strongest voice within your target industry. If you need to see the statistics instead, use the option in the top right corner to change the view to ‘Backlink Statistics’, which shows total backlink counts, EDU/GOV links and so on.

What can I use the Bulk Backlink Checker tool for?

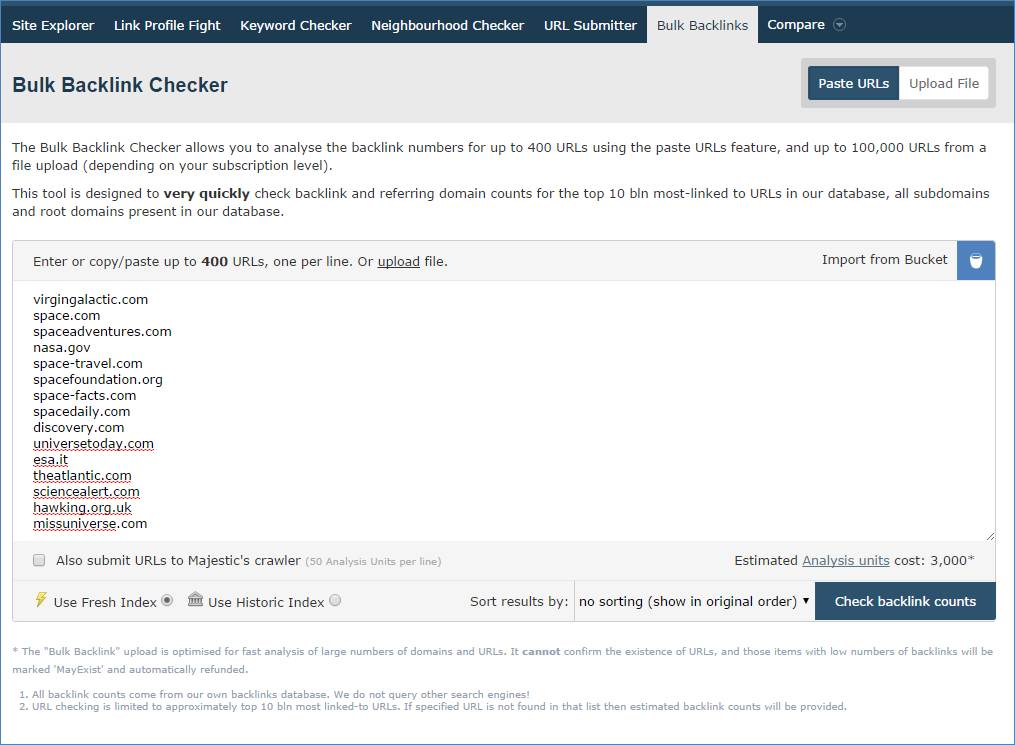

There are various uses for the Bulk Backlink Checker. The main use is simply to rapidly check important information such as Trust Flow, Citation Flow and link counts for multiple URLs or domains at once. Since the introduction of the mobile app, you can use the tool for even more tasks, such as outreach, targeting or discovering new opportunities. If you need to check a list of links in bulk, this is the tool for you. Try comparing competitors, potential partners, all the pages on a single site or even Twitter profiles! With our #MadeInSpace season in full flow, we decided to look at the space industry by way of example:

What Else Changed?

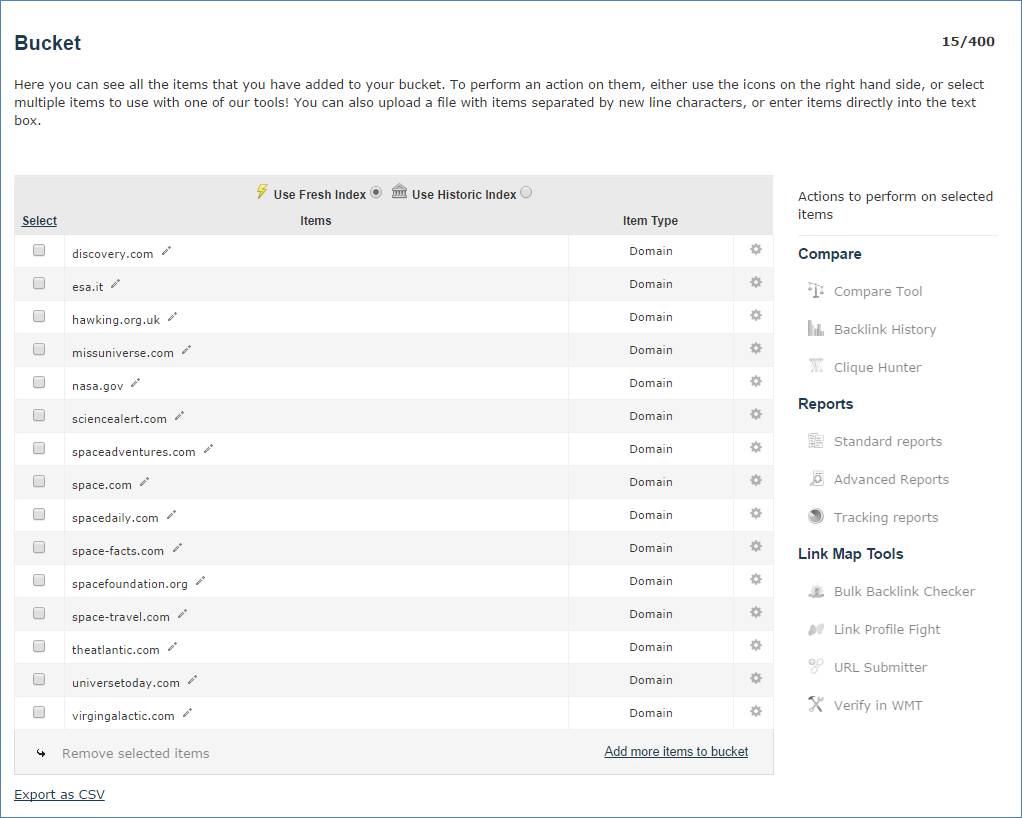

Firstly, we decided to increase the number of sites that can be added: the limit is now 400 URLs across all subscriptions. You can also import a list from your Bucket as well as submit URLs to our crawler. As an added surprise, we have also increased your Bucket space to 400 URLs!

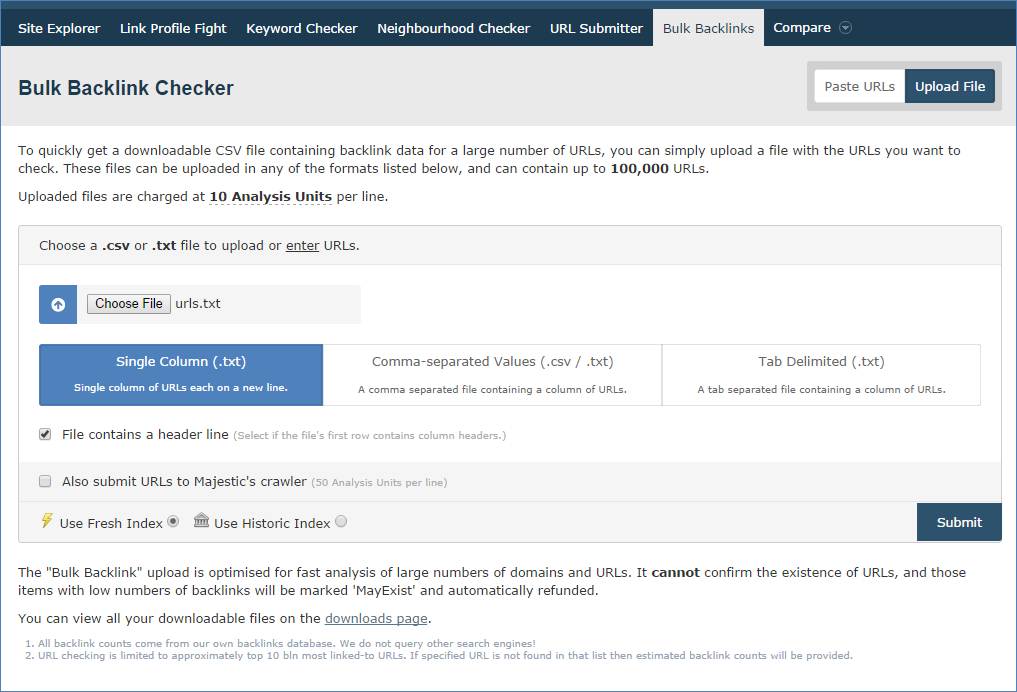

Just like before, you can also upload a file of links instead, containing up to 100,000 URLs. The tool accepts .csv or .txt files. (Please note that when you upload a file, this will take 10 Analysis Units per row of data. If you copy and paste, this will take 200 Analysis Units per item. If the item returns a ‘Not Found’ or ‘Estimated’ result, then you will not be charged for that item. You can keep an eye on your monthly unit usage by visiting your Subscriptions page.)
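To make the difference between the two methods concrete, here is a minimal Python sketch of the unit arithmetic described above. The rates (10 units per uploaded row, 200 per pasted item) and the free ‘Not Found’ and ‘Estimated’ results come from this post. The function name, the ‘Found’ label and the assumption that the free results apply to both methods are illustrative only and are not part of any Majestic API.

```python
# Rates quoted in the post; everything else here is a hypothetical illustration.
UPLOAD_UNITS_PER_ROW = 10
PASTE_UNITS_PER_ITEM = 200
FREE_RESULTS = {"Not Found", "Estimated"}  # results that are not charged


def units_charged(results, method):
    """Estimate the Analysis Units used for a list of per-item results.

    `results` holds one status string per item, and `method` is either
    "upload" or "paste". Assumes free results apply to both methods.
    """
    if method == "upload":
        rate = UPLOAD_UNITS_PER_ROW
    elif method == "paste":
        rate = PASTE_UNITS_PER_ITEM
    else:
        raise ValueError("method must be 'upload' or 'paste'")
    billable = sum(1 for status in results if status not in FREE_RESULTS)
    return billable * rate


# A full 400-item list in which two items are not charged.
example = ["Found"] * 398 + ["Not Found", "Estimated"]
print(units_charged(example, "paste"))   # 79600
print(units_charged(example, "upload"))  # 3980
```

Under these assumptions, the same list costs 20 times fewer units when uploaded as a file than when pasted.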

What do you think? Any good?

That’s what the comments are for 🙂

- New Bulk Backlink Checker Features - May 13, 2016


As a power user, I am extremely disappointed with the update. Charging 200 credits for the same exact thing is ridiculous! I will be moving my account due to this, because it is an extremely useful feature that you have completely ruined. Yes, I go through a lot of credits, but it’s all paid for – using the quick bulk check is a very useful function for me.

May 14, 2016 at 12:45 pm

Hi Michael. No user with the email address you supplied in your comment exists in our system.

How many URLs can you check on your subscription at 200 credits per time? Please also consider the upload method.

May 16, 2016 at 10:45 am

This is a really nice template. Thanks for sharing it.

May 16, 2016 at 7:34 am

Great tool for quickly checking stats of expired domains, just remember to use multiple variations of the domains (www, http, none, etc) or you’ll miss out on a few golden nuggets.

May 20, 2016 at 2:08 am

Actually – a word of warning here. I agree that URL vs subdomain vs root domain can uncover different data, but be careful never to compare (say) a URL Trust Flow with a Root Domain Trust Flow. Trust Flow (and CF) is calculated relative to the scope/level that it is searched on. So a URL’s TF is compared to all the other URLs in the known universe, whilst a root domain is compared with all the other root domains in the known universe.
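For anyone who wants to build the variations mentioned above before pasting or uploading a list, here is a small Python sketch. The function name and the exact set of four forms are assumptions made for illustration; which scope (URL, subdomain or root domain) each form is treated as depends on the tool, so keep the results for each form separate rather than comparing them with one another.

```python
def domain_variations(domain):
    """Return the bare, www, http and http+www forms of a domain.

    Hypothetical helper: the four forms are an illustrative choice,
    not an official list of what the tool accepts.
    """
    bare = domain.strip().lower()
    # Drop any scheme so every variation starts from the same base.
    for prefix in ("https://", "http://"):
        if bare.startswith(prefix):
            bare = bare[len(prefix):]
    bare = bare.rstrip("/")
    if bare.startswith("www."):
        bare = bare[len("www."):]
    return [
        bare,
        "www." + bare,
        "http://" + bare,
        "http://www." + bare,
    ]


for variation in domain_variations("HTTP://www.Example.com/"):
    print(variation)
```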

May 20, 2016 at 1:48 pm

Congratulations for the great work. Your tool and articles are amazing!

May 22, 2016 at 11:30 pm

We have been using Majestic for the last 5 years. My team members are so disappointed with the recent 200 credit charges. What the heck are you guys trying to achieve? More revenue, or to kick out loyal customers?

May 31, 2016 at 3:48 pm

Not sure if I am doing something wrong, but I have sorted the results on the basis of TopicalTrustFlow business, ranking them from high to low, and when I press export, the data is not exported in the same order, i.e. ranked from high to low.

May 31, 2016 at 8:41 pm

Doesn’t make sense to me. You may want to include this feature.

Majestic crawls my sites excessively. While I appreciate the attention, Majestic is using too much bandwidth. How can I get you to scale back without stopping completely?

May 31, 2016 at 8:43 pm

Hi Ernest. This is quite easy to do. Use the "Crawl Delay" syntax in your Robots.txt file.

June 5, 2016 at 2:23 pm

You can easily slow down the bot by adding the following to your robots.txt file:

———-

User-Agent: MJ12bot

Crawl-Delay: 5

———-

Crawl-Delay should be an integer; it is the number of seconds to wait between requests.
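If you want to check how a parser reads these two lines, one option is Python's standard-library urllib.robotparser, which understands Crawl-Delay. This is only a sketch of one parser's behaviour, not a statement of how MJ12bot itself processes the file, and the second user agent name is made up for the example. In the file itself, the two lines should sit directly under one another, because this parser treats a blank line as the end of a record.

```python
from urllib.robotparser import RobotFileParser

# The two directives from the comment above, with no blank line between them.
ROBOTS_TXT = """\
User-Agent: MJ12bot
Crawl-Delay: 5
"""


def crawl_delay_for(robots_txt, user_agent):
    """Return the Crawl-Delay in seconds that applies to user_agent, or None."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.crawl_delay(user_agent)


print(crawl_delay_for(ROBOTS_TXT, "MJ12bot"))       # 5
print(crawl_delay_for(ROBOTS_TXT, "SomeOtherBot"))  # None
```

Some online validators do not recognise Crawl-Delay at all, so a parser that does support it can be a useful second opinion.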

I can’t see myself coming back to you after these posts and unsatisfactory replies.

May 31, 2016 at 10:16 pm

I stopped because you were overpriced, saw this update, and I feel more justified in my decision to have pulled out.

I’m sorry, but you can do better than this.

It lacks, it lacks… well, it’s just not bloody dynamic, is it.

This looks like some really helpful changes to your software. Looking forward to using it ;).

May 31, 2016 at 10:17 pm

Rip off with the 200 credits per line now. smh

May 31, 2016 at 11:06 pm

This is just brilliant!!! Thank you for the extra bonus for bulk backlink checker!

May 31, 2016 at 11:12 pm

I want to check backlinks to this page [LINK REMOVED] to be able to develop an SEO campaign. Can you help me finish it?

June 1, 2016 at 3:15 am

Please set up a support ticket for this.

June 5, 2016 at 2:25 pm

Looks to me like someone is trying to spam.

June 1, 2016 at 6:47 am

We look to block the spam, but not the legitimate comments. We usually won’t let links through though.

June 5, 2016 at 2:26 pm

Unbelievable. I’ve used up almost all of my 5,000,000 analysis points in the last week, my subscription is now useless for the next 3 weeks which will severely disrupt my business. Quite simply, I can’t continue to use your services under these conditions so I’m looking for viable options elsewhere.

June 1, 2016 at 10:24 am

I wrote a fair comment and you deleted it.

June 1, 2016 at 10:42 am

says everything

No – the comment is there – but I have been on holiday.

June 5, 2016 at 2:27 pm

Hi all – especially David and Martin in the Comments. Sorry for the delay in replying. I have been up a mountain in Wales with the kids and I am guessing nobody else wanted to tackle the harder comments!

You do not need to expend 200 analysis units per URL. If you use the file upload method, instead of the cut and paste method, you only expend 10 analysis units per URL, making your account go 20 times further!

The cut and paste method is an "instant on demand" method, which is highly server intensive, whilst the upload method lets us take a few more seconds on the analysis and run it in the background. You can also get up to 100,000 URLs analyzed at one time instead of 400 with this method, so it is much more scalable.

In regards to the pricing, the fact is that we have the lowest cost of entry of any of the major link tools and we aim to provide more data and link insight for the money. We also do not charge units for using Site Explorer. To keep ourselves as the lowest cost but with the largest data allowances, we do need to make sure that the data usage is properly managed. We will continue to look at the stats after implementing this change as to what types of users are being materially affected, but we would rather not raise pricing to those of our competition as we do like having the strongest data at the lowest price for most users.

June 5, 2016 at 2:48 pm

Thank you for your reply, and I hope you had a good time on holiday.

June 5, 2016 at 7:18 pm

With respect, price is only an issue when quality is in question.

Thanks David. I would agree. I believe that Majestic’s Flow Metrics are the strongest on the market. I have seen a couple of third party research documents that support that view recently. Our objective here is to have the best metrics AND the lowest cost of entry, which I think we currently have.

June 6, 2016 at 10:27 am

If you were at $25 to $30 a month, then I’d sign up.

June 6, 2016 at 11:46 am

@Dixon Jones: http://www.searchenginepromotionhelp.com/m/robots-text-tester/robots-checker.php says that your proposed solution is not valid.

User-Agent: MJ12bot

The line below must be an allow, disallow or comment statement

Crawl-Delay: 65

Missing / at start of file or folder name

Suggestions for a VALID solution?

June 8, 2016 at 5:05 am

Hi Ernest,

Try 20 instead of 65.

If you submit your website in a support ticket, we can take a look. It may be that the tool you are using for the test does not itself understand Crawl-Delay, because Google itself does not obey Crawl-Delay (they make you throttle via their search console instead). It may also be a simple syntax issue. The protocol is described on Wikipedia here:

https://en.wikipedia.org/wiki/Robots_exclusion_standard and is also used by Bing here:

https://blogs.bing.com/webmaster/2009/08/10/crawl-delay-and-the-bing-crawler-msnbot/

Our own crawler’s documentation on Crawl-Delay and other elements is at http://mj12bot.com/

Dixon.

June 8, 2016 at 10:18 am